Face Recognition and Tracking with OpenCV and face_recognition (Part Two)

Here we are again, ready to see how to build a simple piece of software that recognizes faces.

In the previous article, we saw how to manage the webcam and handle videos and images in real time. Now let’s see how to extract features from images and use them as “signatures” for comparison. In this first example, we will use a Python library that makes our lives easier: face_recognition.

To use it, you simply need to install the library:

pip3 install face_recognition

and import it into our code:

import face_recognition

What do we need, then, to implement our classifier?

To recognize a face, just follow a few simple steps.

Load the image of the face you want to compare:

known_face=face_recognition.load_image_file("known_face.jpg")

Extract the information (features) from it that we will use for comparison:

known_face_features=face_recognition.face_encodings(known_face)[0]

Load the file of the unknown face to verify:

unknown_face=face_recognition.load_image_file("unknown_face.jpg")

Extract from it the information we will use for the comparison:

unknown_face_features=face_recognition.face_encodings(unknown_face)[0]

Compare the two faces:

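comparison=face_recognition.compare_faces([known_face_features], unknown_face_features)

The method returns a list with one boolean for each known face passed in the first argument. Here we pass a single known face, so comparison[0] is True if the two faces match and False otherwise.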

That’s it.
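To make the result of the comparison less of a black box, here is a minimal pure-Python sketch of the idea behind it: the distance between two encodings is measured, and the faces are considered a match when that distance does not exceed a tolerance (0.6 is the library's default). This is an illustration, not the library's code. The names sketch_face_distance and sketch_compare_faces and the three-value toy vectors are assumptions made for the example; real encodings contain 128 values.

```python
import math

def sketch_face_distance(known_encodings, candidate):
    """Euclidean distance from the candidate to each known encoding."""
    return [math.dist(known, candidate) for known in known_encodings]

def sketch_compare_faces(known_encodings, candidate, tolerance=0.6):
    """One boolean per known encoding: True when the distance is within tolerance."""
    distances = sketch_face_distance(known_encodings, candidate)
    return [d <= tolerance for d in distances]

# Toy 3-value "encodings" (hypothetical data, real ones have 128 values)
alice = [0.10, 0.20, 0.30]
bob = [0.90, 0.10, 0.70]
probe = [0.12, 0.18, 0.33]

distances = sketch_face_distance([alice, bob], probe)
matches = sketch_compare_faces([alice, bob], probe)
print(matches)  # [True, False]
```

A lower tolerance makes the comparison stricter, at the price of more missed matches; a higher one does the opposite.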

But how does the face_recognition library work? In general, locating the faces in an image can be done with two possible approaches, chosen according to the requirements and the resources available.

When analyzing an image, you select the algorithm through the model parameter of the following method:

faces_in_img = face_recognition.face_locations(image,number_of_times_to_upsample=1,model='hog')

The face_locations method identifies the faces within a photo. Its model parameter defines the algorithm to use and can take two values: hog (Histogram of Oriented Gradients) and cnn (Convolutional Neural Network).
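Each entry returned by face_locations is a tuple in the order (top, right, bottom, left), which differs from the (x, y, width, height) rectangles that OpenCV drawing functions usually work with. The sketch below shows one way to convert between the two and to crop the face region. The helper names location_to_xywh and crop_face and the sample coordinates are hypothetical, and the image is modeled as a plain list of pixel rows to keep the example self-contained.

```python
def location_to_xywh(location):
    """Convert (top, right, bottom, left) into (x, y, width, height)."""
    top, right, bottom, left = location
    return left, top, right - left, bottom - top

def crop_face(image_rows, location):
    """Cut the face region out of an image stored as a list of pixel rows."""
    top, right, bottom, left = location
    return [row[left:right] for row in image_rows[top:bottom]]

# Hypothetical result, shaped like the output of face_locations
faces_in_img = [(50, 210, 170, 90)]
boxes = [location_to_xywh(loc) for loc in faces_in_img]
print(boxes)  # [(90, 50, 120, 120)]

# Tiny 4x4 "image" used only to show the cropping
tiny_image = [[r * 10 + c for c in range(4)] for r in range(4)]
patch = crop_face(tiny_image, (1, 3, 3, 1))
print(patch)  # [[11, 12], [21, 22]]
```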

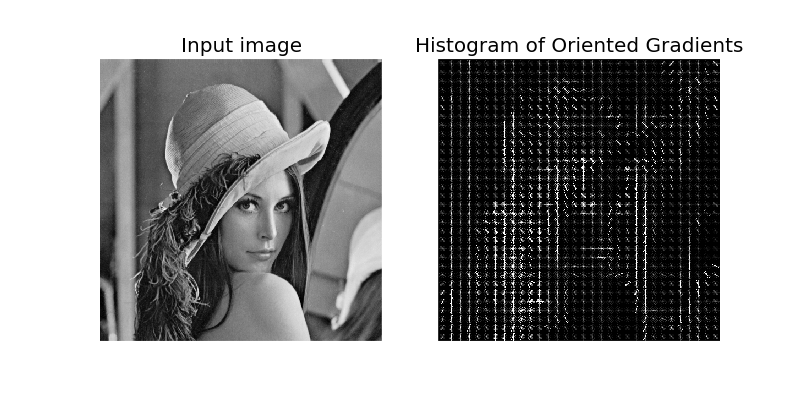

HOG (Histogram of Oriented Gradients)

It is a feature descriptor based on first-order oriented image gradients. The filters that compute these features are engineered by hand and fixed, so the feature extraction itself involves no learning or adaptation. The advantage of this approach is that it needs few resources in the detection phase and runs well on a CPU.
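To give an idea of what "oriented gradients" means in practice, the following sketch computes the orientation histogram of a single small cell of a grayscale image. It is a simplified illustration under stated assumptions, not the detector used by the library: a complete HOG pipeline also normalizes groups of cells and feeds the result to a classifier. The function name cell_histogram, the 9 bins, and the toy cells are choices made for the example.

```python
import math

def cell_histogram(cell, bins=9):
    """Magnitude-weighted histogram of unsigned gradient orientations (0-180 degrees)."""
    hist = [0.0] * bins
    bin_width = 180.0 / bins
    height, width = len(cell), len(cell[0])
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # First-order gradients via central differences
            gx = cell[y][x + 1] - cell[y][x - 1]
            gy = cell[y + 1][x] - cell[y - 1][x]
            magnitude = math.hypot(gx, gy)
            angle = math.degrees(math.atan2(gy, gx)) % 180.0
            # The extra modulo guards against rounding that lands exactly on 180
            hist[int(angle // bin_width) % bins] += magnitude
    return hist

# Brightness changes from left to right: gradients point along x (0 degrees)
vertical_edge = [[0, 0, 10, 10] for _ in range(4)]
# Brightness changes from top to bottom: gradients point along y (90 degrees)
horizontal_edge = [[0] * 4, [0] * 4, [10] * 4, [10] * 4]

vertical_hist = cell_histogram(vertical_edge)
horizontal_hist = cell_histogram(horizontal_edge)
print(vertical_hist.index(max(vertical_hist)))      # 0
print(horizontal_hist.index(max(horizontal_hist)))  # 4
```

The two edges end up in different bins, which is what lets the descriptor capture the shape of a face through the direction of its contours.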

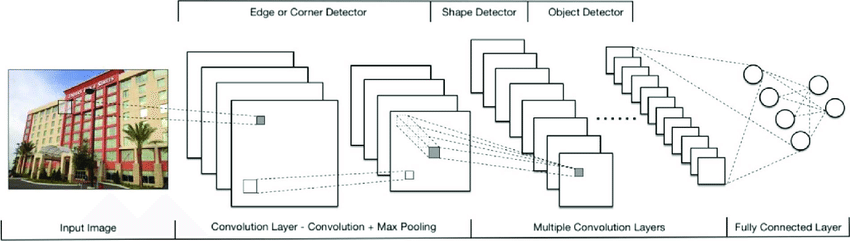

CNN (Convolutional Neural Network)

It is a neural network based on a hierarchical deep learning architecture: it operates on multiple layers that repeatedly filter the target image, up to the final classification phase. The filters are “trainable” and therefore adapt during learning.

For this reason, CNNs can achieve high levels of accuracy: the layers are trained ad hoc and learn, autonomously, low-level features similar to those that the HOG method computes with hand-engineered filters. The price is a higher computational cost, so this model is noticeably slower than hog unless a GPU is available.
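The basic building block of such a network is a convolution followed by a non-linearity. The sketch below applies one 3x3 filter and a ReLU to a tiny image in pure Python. In a trained CNN the filter values are learned from data; here they are set by hand (an assumption made for the example) to a vertical-edge filter, which shows how a single layer can respond to the same kind of low-level feature that HOG encodes.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation used in CNN layers."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j]
                for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]

def relu(feature_map):
    """Keep positive responses, set negative ones to zero."""
    return [[max(0, value) for value in row] for row in feature_map]

# Tiny image with a dark left half and a bright right half
image = [[0, 0, 10, 10] for _ in range(4)]
# Hand-set filter that responds to dark-to-bright transitions
dark_to_bright = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
# Mirrored filter that responds to bright-to-dark transitions
bright_to_dark = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]

feature_map = relu(conv2d(image, dark_to_bright))
opposite_map = relu(conv2d(image, bright_to_dark))
print(feature_map)   # [[30, 30], [30, 30]]
print(opposite_map)  # [[0, 0], [0, 0]]
```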

The parameter number_of_times_to_upsample=1 defines how many times the image is upsampled (enlarged) before searching for faces. The higher the value, the greater the chance of finding small faces in the target image, at the cost of a longer processing time.
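As a rough illustration of why upsampling helps with small faces, the sketch below enlarges an image by repeating every pixel and computes how large a face appears after a given number of passes. It assumes that each pass doubles the width and the height of the image; the functions upsample_2x and apparent_face_size are hypothetical helpers written for this example, and the library may interpolate pixels differently.

```python
def upsample_2x(image):
    """Double width and height by repeating every pixel (nearest neighbour)."""
    enlarged = []
    for row in image:
        wide_row = [pixel for pixel in row for _ in range(2)]
        enlarged.append(wide_row)
        enlarged.append(list(wide_row))
    return enlarged

def apparent_face_size(face_size_px, number_of_times_to_upsample):
    """Side of a face, in pixels, after the given number of doublings (assumed 2x per pass)."""
    return face_size_px * 2 ** number_of_times_to_upsample

small = [[1, 2], [3, 4]]
print(upsample_2x(small))         # [[1, 1, 2, 2], [1, 1, 2, 2], [3, 3, 4, 4], [3, 3, 4, 4]]
print(apparent_face_size(30, 2))  # 120
```

Under the same assumption, the number of pixels to scan grows by a factor of four with every pass, which explains the longer processing time.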

To conclude this article, here is a small example that analyzes the frames of a real-time video captured by the webcam and performs face recognition on each of them.

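import cv2
import face_recognition

video = cv2.VideoCapture(0)

# load the reference face and extract its features
known_face = face_recognition.load_image_file("known_face.jpg")
known_face_features = face_recognition.face_encodings(known_face)[0]

while True:
    # extract the individual frame from the video
    ret, frame = video.read()
    if not ret:
        # no frame available (webcam closed or disconnected)
        break
    # OpenCV delivers frames in BGR order, face_recognition expects RGB
    rgb_frame = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)
    unknown_face_position_in_frame = face_recognition.face_locations(rgb_frame, model='hog')
    # extract the features from the frames where the face is present
    if len(unknown_face_position_in_frame) > 0:
        # reuse the positions already found instead of detecting the faces again
        unknown_face_features_in_video = face_recognition.face_encodings(rgb_frame, unknown_face_position_in_frame)[0]
        # compare the face found in the frame with the reference face
        comparison = face_recognition.compare_faces([known_face_features], unknown_face_features_in_video)
        # compare_faces returns a list of booleans, one per known face
        if comparison[0]:
            print('Recognized')
        else:
            print('Unknown')
    else:
        print('Unknown')

video.release()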

